You can evaluate the compatibility of shared prompts across two LLM APIs by comparing their outputs on identical inputs using similarity metrics.

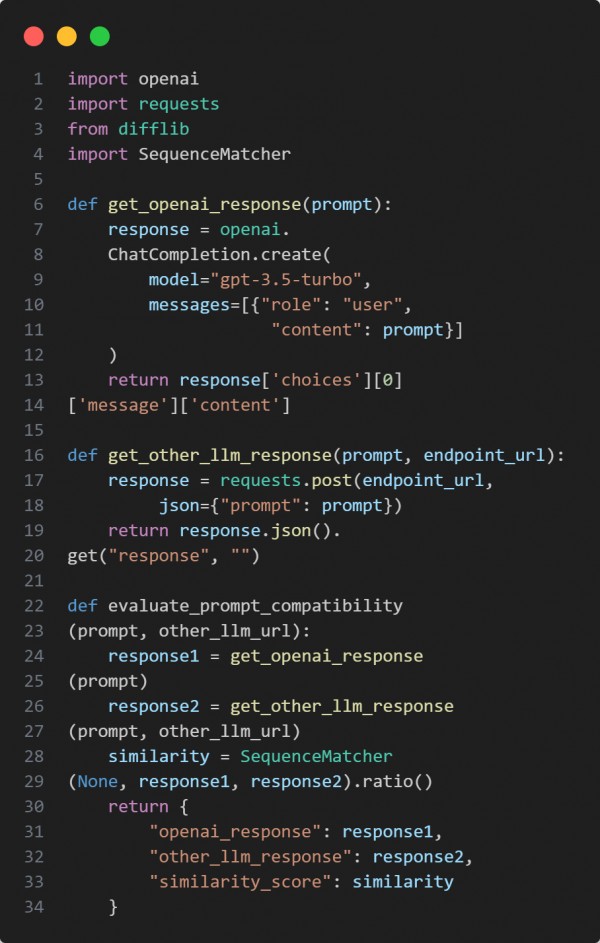

The code snippet above relies on the following key components:

- OpenAI's API to generate a response from a GPT-based model.
- A generic POST request to call another LLM via its API endpoint.
- `SequenceMatcher` (from Python's `difflib`) to compute the textual similarity between the two responses.
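For reference, the three pieces listed above can be sketched in plain Python roughly as follows. This is a minimal sketch, not the exact code from the screenshot: the model name `gpt-4o-mini`, the helper names (`ask_openai`, `ask_other_llm`, `similarity`, `compare_prompt`), and the second provider's request and response shape (`{"prompt": ...}` in, a `"text"` field out) are all assumptions made for illustration, so adapt them to the APIs you actually use. The generic POST uses the standard library's `urllib` to keep the example free of extra dependencies.

```python
import json
import urllib.request
from difflib import SequenceMatcher
from typing import Callable


def ask_openai(prompt: str, model: str = "gpt-4o-mini") -> str:
    """Query an OpenAI chat model.

    Assumes the `openai` package (v1.x client) is installed and that
    OPENAI_API_KEY is set; the model name is only a placeholder.
    """
    from openai import OpenAI  # lazy import so the rest works without it

    client = OpenAI()
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content or ""


def ask_other_llm(prompt: str, url: str, api_key: str) -> str:
    """Send the same prompt to a second provider with a generic POST.

    The JSON payload and the "text" response field are hypothetical;
    replace them with the schema of the provider you are testing.
    """
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))["text"]


def similarity(a: str, b: str) -> float:
    """Character-level similarity ratio between 0.0 and 1.0."""
    return SequenceMatcher(None, a, b).ratio()


def compare_prompt(
    prompt: str,
    model_a: Callable[[str], str],
    model_b: Callable[[str], str],
) -> float:
    """Run one prompt through two model callables and score the overlap."""
    return similarity(model_a(prompt), model_b(prompt))


if __name__ == "__main__":
    # Stub models stand in for real API calls so the sketch runs offline.
    score = compare_prompt(
        "Say hello",
        lambda p: "Hello there!",
        lambda p: "Hello, there.",
    )
    print(f"Similarity: {score:.2f}")
```

To compare real providers, pass `ask_openai` and a small wrapper around `ask_other_llm` (with your endpoint URL and key filled in) as the two callables. Keep in mind that `SequenceMatcher` measures surface-level character overlap, so two answers that mean the same thing but are worded differently can still score low.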

This approach gives you a practical way to check how consistently a shared prompt behaves across different LLM platforms.
