To set up a TPU cluster in Google Cloud, configure a TPU-enabled VM via gcloud, install dependencies, and run a TensorFlow program using the TPU strategy.

Here are the steps you can refer to:

1: Create a TPU-enabled VM on Google Cloud

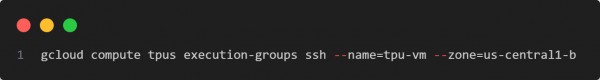
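As a rough sketch of this step (not the exact command from the original screenshot), provisioning can be scripted from Python by building the argument list for gcloud compute tpus tpu-vm create. The VM name my-tpu-vm, the zone us-central1-b, the accelerator type v2-8 and the runtime version tpu-vm-tf-2.16.1-pjrt are placeholder assumptions. Check the Cloud TPU documentation for the zones, accelerator types and TensorFlow runtime versions available to your project. With dry_run left at True, the script only prints the command.

```python
import shlex
import subprocess


def build_create_command(name, zone, accelerator_type, version):
    """Return the gcloud argument list that provisions a single TPU VM."""
    return [
        "gcloud", "compute", "tpus", "tpu-vm", "create", name,
        f"--zone={zone}",
        f"--accelerator-type={accelerator_type}",
        f"--version={version}",
    ]


def create_tpu_vm(name, zone, accelerator_type, version, dry_run=True):
    """Print (dry run) or execute the create command and return it."""
    cmd = build_create_command(name, zone, accelerator_type, version)
    if dry_run:
        # Only show the command; nothing is provisioned.
        print(shlex.join(cmd))
    else:
        # Requires an installed, authenticated gcloud CLI.
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    # Placeholder values: adjust them to your project and region.
    create_tpu_vm("my-tpu-vm", "us-central1-b", "v2-8", "tpu-vm-tf-2.16.1-pjrt")
```

Building the command as a list rather than a single string avoids shell-quoting problems when it is passed to subprocess.run.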

2: SSH into the VM
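A similar sketch for connecting to the VM wraps gcloud compute tpus tpu-vm ssh. The VM name and zone are the same placeholder assumptions as above. The optional command argument uses the --command flag to run one remote command instead of opening an interactive shell. Here it is used for a hypothetical check of the TensorFlow version installed on the VM.

```python
import shlex
import subprocess


def build_ssh_command(name, zone, command=None):
    """Return the gcloud argument list that opens an SSH session to a TPU VM."""
    cmd = ["gcloud", "compute", "tpus", "tpu-vm", "ssh", name, f"--zone={zone}"]
    if command is not None:
        # Run a single command remotely instead of opening an interactive shell.
        cmd.append(f"--command={command}")
    return cmd


def ssh_to_tpu_vm(name, zone, command=None, dry_run=True):
    """Print (dry run) or execute the SSH command and return it."""
    cmd = build_ssh_command(name, zone, command)
    if dry_run:
        print(shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    # Hypothetical check: print the TensorFlow version installed on the VM.
    ssh_to_tpu_vm(
        "my-tpu-vm",
        "us-central1-b",
        command="python3 -c 'import tensorflow as tf; print(tf.__version__)'",
    )
```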

3: Run TensorFlow code on TPU
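The original TensorFlow script is not reproduced here. The following is a minimal sketch of such a script, assuming it runs directly on a TPU VM (hence tpu="local") with TensorFlow installed. The model, the synthetic data shapes and the batch size are illustrative assumptions. So is the fallback to the default strategy when no TPU can be initialized, which was added so that the sketch also runs on a machine without a TPU.

```python
import numpy as np
import tensorflow as tf


def get_strategy(tpu="local"):
    """Return a TPUStrategy, or the default strategy if no TPU can be initialized."""
    try:
        # On a TPU VM the TPU chips are attached locally, so tpu="local" is used.
        resolver = tf.distribute.cluster_resolver.TPUClusterResolver(tpu=tpu)
        tf.config.experimental_connect_to_cluster(resolver)
        tf.tpu.experimental.initialize_tpu_system(resolver)
        return tf.distribute.TPUStrategy(resolver)
    except Exception as exc:  # Assumption: any error here means "no TPU available".
        print(f"TPU not available ({exc!r}); falling back to the default strategy.")
        return tf.distribute.get_strategy()


def build_model():
    """A small binary classifier; the architecture is only illustrative."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(20,)),
        tf.keras.layers.Dense(64, activation="relu"),
        tf.keras.layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model


def main(epochs=2):
    strategy = get_strategy()
    print("Replicas in sync:", strategy.num_replicas_in_sync)

    # Synthetic data: 1024 random feature vectors with random binary labels.
    rng = np.random.default_rng(0)
    x = rng.random((1024, 20), dtype=np.float32)
    y = rng.integers(0, 2, size=(1024, 1)).astype(np.float32)

    # Variables must be created inside the strategy scope to be placed on the TPU.
    with strategy.scope():
        model = build_model()

    # Global batch of 128 divides evenly across replicas (e.g. the 8 cores of a v2-8).
    return model.fit(x, y, batch_size=128, epochs=epochs, verbose=2)


if __name__ == "__main__":
    main()
```

Creating the model inside strategy.scope() is what places its variables on the TPU replicas. model.fit then splits each global batch of 128 examples across the number of replicas reported by num_replicas_in_sync.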

The above code relies on the following key points:

- Uses TPUClusterResolver to connect to the TPU hardware on Google Cloud.
- Initializes the TPU system and wraps training in TPUStrategy.
- Demonstrates basic Keras model training on synthetic data.
- Uses the Google Cloud CLI (gcloud) to automate TPU VM provisioning.

Hence, by provisioning a TPU-enabled VM on Google Cloud and using TensorFlow’s TPU APIs, the script initializes the TPU system and runs distributed deep learning training across the TPU cores.
