Normalizing embeddings to unit length puts every vector on the same scale, which makes cosine-similarity matching of unseen classes more reliable in One-Shot Learning.
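As a worked equation, one common form of L2 normalization with an epsilon guard is shown below. It treats $\epsilon$ as a lower bound on the norm, as PyTorch's `F.normalize` does. Some code adds $\epsilon$ to the norm instead, so treat this exact form as an assumption. Whenever $\|\mathbf{x}\|_2 \ge \epsilon$, cosine similarity between two embeddings reduces to a plain dot product of their normalized versions:

$$
\hat{\mathbf{x}} = \frac{\mathbf{x}}{\max(\|\mathbf{x}\|_2,\ \epsilon)}, \qquad
\cos(\mathbf{a}, \mathbf{b}) = \frac{\mathbf{a} \cdot \mathbf{b}}{\|\mathbf{a}\|_2\,\|\mathbf{b}\|_2} = \hat{\mathbf{a}} \cdot \hat{\mathbf{b}}
$$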

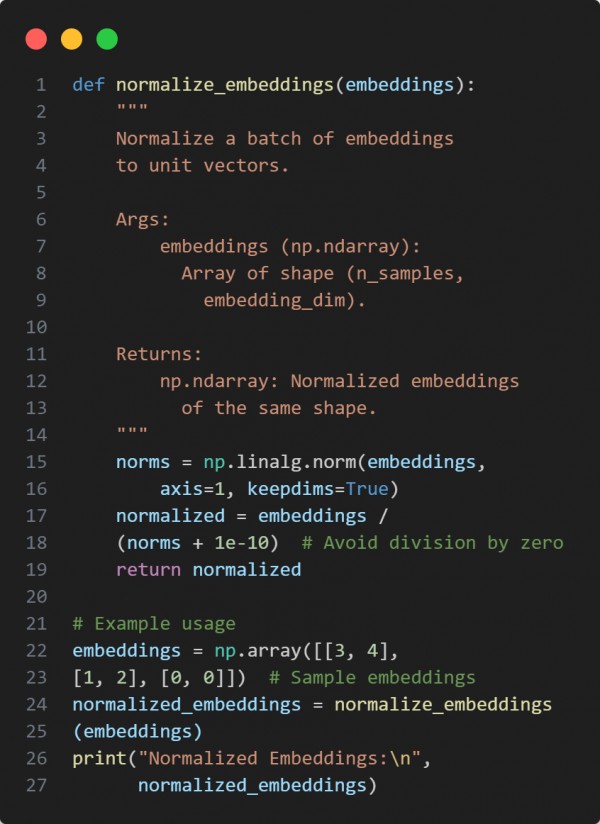

Here is the code snippet you can refer to:

The above code relies on the following key points:

- Uses L2 normalization to scale vectors to unit length.
- Handles edge cases (e.g., zero vectors) safely using epsilon.
- Improves similarity comparison when matching query samples against support samples.
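Because the original snippet is only available as an image, here is a minimal sketch of those three points in plain Python, with no ML framework assumed. It also assumes the embeddings have already been produced by some encoder. The function names `l2_normalize`, `cosine_similarity` and `match_query`, the `eps=1e-12` default, and the toy support set are hypothetical choices for illustration, not taken from the original code.

```python
import math
from typing import Dict, List, Sequence


def l2_normalize(vec: Sequence[float], eps: float = 1e-12) -> List[float]:
    """Scale vec to unit L2 length.

    eps acts as a floor on the norm (hypothetical default), so a zero
    vector returns zeros instead of raising ZeroDivisionError.
    """
    norm = math.sqrt(sum(v * v for v in vec))
    denom = max(norm, eps)
    return [v / denom for v in vec]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    # After normalization, cosine similarity is just the dot product.
    a_hat, b_hat = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a_hat, b_hat))


def match_query(query: Sequence[float], support: Dict[str, Sequence[float]]) -> str:
    """One-shot matching: return the label of the most similar support embedding."""
    return max(support, key=lambda label: cosine_similarity(query, support[label]))


if __name__ == "__main__":
    # Toy support set: one embedding per class (hypothetical values).
    support = {"cat": [3.0, 4.0], "dog": [-1.0, 0.5]}
    # The query has a much larger magnitude than "cat" but the same direction.
    print(match_query([30.0, 41.0], support))  # → cat
```

Since every vector is rescaled to unit length first, the query's larger magnitude has no effect on the match; only its direction matters.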

Hence, normalization aligns embedding magnitudes, enabling reliable similarity comparisons in One-Shot Learning tasks.
