You can build an end-to-end QLoRA fine-tuning pipeline with PyTorch Lightning by combining Hugging Face Transformers, peft, and 4-bit quantization (typically via bitsandbytes) to fine-tune large language models efficiently.

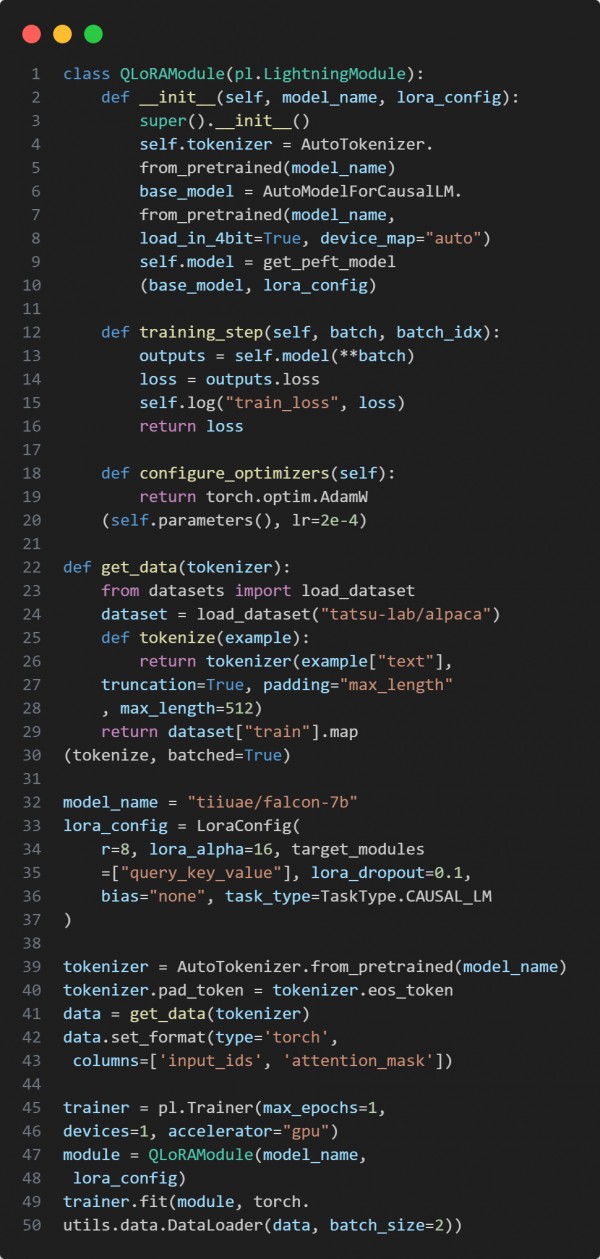

Here is the code snippet you can refer to:

The code above uses the following key strategies:

- Uses QLoRA with Hugging Face peft for memory-efficient fine-tuning.
- Leverages PyTorch Lightning for clean training abstraction.
- Supports 4-bit quantization for large models like Falcon-7B.
- Integrates Hugging Face Datasets for easy data loading and preprocessing.
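The strategies above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not a definitive implementation: the dataset (`Abirate/english_quotes` with a `quote` column), the LoRA hyperparameters, the single-GPU `device_map`, and the helper names (`QLoRAConfig`, `lora_kwargs`, `load_qlora_model`, `build_dataloader`, `build_module`, `main`) are all illustrative choices. Heavy imports are deferred into the functions so the configuration can be inspected without a GPU stack installed.

```python
from dataclasses import dataclass


@dataclass
class QLoRAConfig:
    # All defaults below are illustrative assumptions, not tuned values.
    model_name: str = "tiiuae/falcon-7b"
    dataset_name: str = "Abirate/english_quotes"  # assumed example dataset
    text_column: str = "quote"                    # assumed text column
    target_modules: tuple = ("query_key_value",)  # Falcon attention projection
    lora_r: int = 16
    lora_alpha: int = 32
    lora_dropout: float = 0.05
    learning_rate: float = 2e-4
    max_length: int = 512
    batch_size: int = 4


def lora_kwargs(cfg):
    """Keyword arguments for peft.LoraConfig, kept pure so they are easy to check."""
    return {"r": cfg.lora_r, "lora_alpha": cfg.lora_alpha,
            "lora_dropout": cfg.lora_dropout, "bias": "none",
            "target_modules": list(cfg.target_modules), "task_type": "CAUSAL_LM"}


def load_qlora_model(cfg):
    """Load the base model in 4-bit NF4 and attach trainable LoRA adapters."""
    import torch
    from peft import LoraConfig, get_peft_model, prepare_model_for_kbit_training
    from transformers import AutoModelForCausalLM, BitsAndBytesConfig

    bnb = BitsAndBytesConfig(load_in_4bit=True, bnb_4bit_quant_type="nf4",
                             bnb_4bit_use_double_quant=True,
                             bnb_4bit_compute_dtype=torch.bfloat16)
    model = AutoModelForCausalLM.from_pretrained(
        cfg.model_name, quantization_config=bnb,
        device_map={"": 0})  # assumption: everything fits on GPU 0
    model = prepare_model_for_kbit_training(model)
    return get_peft_model(model, LoraConfig(**lora_kwargs(cfg)))


def build_dataloader(cfg):
    """Tokenize a Hugging Face dataset and wrap it in a DataLoader."""
    from datasets import load_dataset
    from torch.utils.data import DataLoader
    from transformers import AutoTokenizer, DataCollatorForLanguageModeling

    tokenizer = AutoTokenizer.from_pretrained(cfg.model_name)
    tokenizer.pad_token = tokenizer.eos_token  # Falcon ships without a pad token
    raw = load_dataset(cfg.dataset_name, split="train")
    tokenized = raw.map(
        lambda batch: tokenizer(batch[cfg.text_column], truncation=True,
                                max_length=cfg.max_length),
        batched=True, remove_columns=raw.column_names)
    # mlm=False makes the collator copy input_ids into labels for causal LM.
    collator = DataCollatorForLanguageModeling(tokenizer, mlm=False)
    return DataLoader(tokenized, batch_size=cfg.batch_size, shuffle=True,
                      collate_fn=collator)


def build_module(cfg):
    """Wrap the QLoRA model in a LightningModule."""
    import pytorch_lightning as pl
    import torch

    class QLoRAModule(pl.LightningModule):
        def __init__(self):
            super().__init__()
            self.model = load_qlora_model(cfg)

        def training_step(self, batch, batch_idx):
            loss = self.model(**batch).loss
            self.log("train_loss", loss, prog_bar=True)
            return loss

        def configure_optimizers(self):
            # Only the LoRA adapter weights require gradients.
            trainable = [p for p in self.model.parameters() if p.requires_grad]
            return torch.optim.AdamW(trainable, lr=cfg.learning_rate)

    return QLoRAModule()


def main():
    import pytorch_lightning as pl

    cfg = QLoRAConfig()
    trainer = pl.Trainer(max_epochs=1, accelerator="gpu", devices=1,
                         accumulate_grad_batches=4)
    trainer.fit(build_module(cfg), build_dataloader(cfg))
```

Calling `main()` on a machine with a CUDA GPU and `bitsandbytes` installed would start a one-epoch run. Swapping the model or dataset should only require changing the fields of `QLoRAConfig`, though `target_modules` depends on the architecture.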

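Hence, QLoRA fine-tuning with PyTorch Lightning offers a memory-efficient and modular way to adapt large models: the base weights stay frozen in 4-bit form, only the small LoRA adapters are trained, and Lightning handles the training loop with little extra code.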
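To make the memory saving concrete, here is a rough back-of-the-envelope estimate. It assumes a round 7 billion parameters for a Falcon-7B-sized model and counts only the stored base weights; quantization constants, LoRA adapters, activations, and optimizer state are deliberately ignored, so real usage will be higher. The helper name `weight_memory_gib` is illustrative.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Approximate memory for model weights alone, in GiB."""
    return num_params * bits_per_param / 8 / 1024**3


NUM_PARAMS = 7_000_000_000  # assumption: rounded parameter count

fp16_gib = weight_memory_gib(NUM_PARAMS, 16)  # half-precision weights
nf4_gib = weight_memory_gib(NUM_PARAMS, 4)    # 4-bit quantized weights

print(f"fp16 weights:  {fp16_gib:.2f} GiB")   # about 13.04 GiB
print(f"4-bit weights: {nf4_gib:.2f} GiB")    # about 3.26 GiB
print(f"reduction:     {fp16_gib / nf4_gib:.0f}x")
```

The roughly fourfold reduction in weight storage is what can make a 7B-parameter model fit on a single consumer-grade GPU during fine-tuning, depending on sequence length and batch size.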
