You can implement static and dynamic sharding for TPU datasets by calling tf.data.Dataset.shard() yourself for static sharding, and by letting TPUStrategy shard the dataset automatically for dynamic sharding.

Here are the code snippets you can refer to:

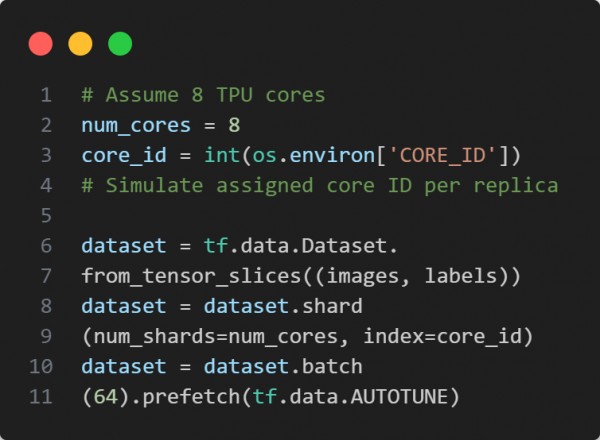

Static Sharding
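As a text version of the static approach, here is a minimal sketch. It assumes a toy dataset built with tf.data.Dataset.range() in place of real input files, and the helper name make_static_shard and its parameters are hypothetical, chosen only for illustration.

```python
import tensorflow as tf


def make_static_shard(num_shards: int, shard_index: int, batch_size: int = 4) -> tf.data.Dataset:
    """Return the slice of a toy dataset that one worker (or core) should read."""
    dataset = tf.data.Dataset.range(16)  # stand-in for real input data (assumption)
    # shard() keeps only elements whose position i satisfies i % num_shards == shard_index.
    dataset = dataset.shard(num_shards=num_shards, index=shard_index)
    # drop_remainder keeps every batch the same shape, which TPUs generally expect.
    dataset = dataset.batch(batch_size, drop_remainder=True)
    return dataset.prefetch(tf.data.AUTOTUNE)


shard0 = make_static_shard(num_shards=2, shard_index=0)
print([batch.tolist() for batch in shard0.as_numpy_iterator()])
# prints [[0, 2, 4, 6], [8, 10, 12, 14]]
```

Calling shard() before batch() matters here: sharding first splits individual elements, whereas sharding after batching would hand out whole batches instead.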

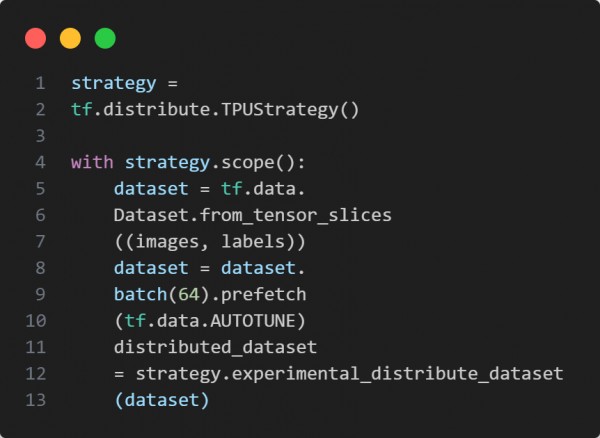

Dynamic Sharding
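The dynamic approach can be sketched in text form as follows. This is an illustration under assumptions, not a definitive setup: the dataset is a toy range, GLOBAL_BATCH_SIZE and make_distributed_dataset are hypothetical names, and the default strategy is used so the sketch also runs on a machine without a TPU. The usual TPU initialization is shown in comments.

```python
import tensorflow as tf

GLOBAL_BATCH_SIZE = 8  # hypothetical value; the strategy splits it across replicas


def make_distributed_dataset(strategy: tf.distribute.Strategy):
    """Build a pipeline with no manual shard() call and hand it to the strategy."""
    dataset = tf.data.Dataset.range(64)  # stand-in for real input data (assumption)
    dataset = dataset.batch(GLOBAL_BATCH_SIZE, drop_remainder=True)
    dataset = dataset.prefetch(tf.data.AUTOTUNE)
    # The strategy decides how the input is split across its replicas.
    return strategy.experimental_distribute_dataset(dataset)


# On a TPU host, the strategy is typically created like this:
#   resolver = tf.distribute.cluster_resolver.TPUClusterResolver()
#   tf.config.experimental_connect_to_cluster(resolver)
#   tf.tpu.experimental.initialize_tpu_system(resolver)
#   strategy = tf.distribute.TPUStrategy(resolver)
strategy = tf.distribute.get_strategy()  # default strategy, used here only as a fallback
dist_dataset = make_distributed_dataset(strategy)
```

Note that the pipeline is batched with the global batch size; the strategy is responsible for turning that into per-replica batches.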

In the above code, we are using the following key points:

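- Static Sharding (shard()): Manually assigns every num_shards-th element to a given worker or core, giving precise control over which data each one reads.
- Dynamic Sharding: TPUStrategy automatically partitions data across TPU replicas.
- experimental_distribute_dataset(): Hands the dataset to the strategy, which splits each global batch across replicas, so the same pipeline scales across TPU sizes.
- prefetch(): Overlaps input preparation with training steps, improving throughput regardless of sharding method.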
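If you want the control of shard() while still running under a strategy, one option is to combine the two points above with distribute_datasets_from_function(). The sketch below is an assumption-laden illustration: dataset_fn and GLOBAL_BATCH_SIZE are hypothetical names, the data is a toy range, and the default strategy stands in for a TPUStrategy. In TensorFlow versions before 2.4 the method is named experimental_distribute_datasets_from_function().

```python
import tensorflow as tf

GLOBAL_BATCH_SIZE = 8  # hypothetical value


def dataset_fn(input_context: tf.distribute.InputContext) -> tf.data.Dataset:
    """Build the input pipeline for one host, sharding it manually."""
    per_replica_batch = input_context.get_per_replica_batch_size(GLOBAL_BATCH_SIZE)
    dataset = tf.data.Dataset.range(64)  # stand-in for real input data (assumption)
    # Static sharding, driven by the values the strategy passes in.
    dataset = dataset.shard(
        input_context.num_input_pipelines, input_context.input_pipeline_id
    )
    dataset = dataset.batch(per_replica_batch, drop_remainder=True)
    return dataset.prefetch(tf.data.AUTOTUNE)


strategy = tf.distribute.get_strategy()  # swap in tf.distribute.TPUStrategy on a TPU host
dist_dataset = strategy.distribute_datasets_from_function(dataset_fn)
```

Here the function batches with the per-replica batch size, because the strategy does not rebatch datasets returned from dataset_fn.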

Hence, static sharding gives granular control over data distribution, while dynamic sharding simplifies parallelism, allowing TPUs to handle dataset partitioning efficiently based on available resources.
