You can design a Neural Architecture Search (NAS) pipeline to optimize the Transformer block for text generation by searching over architectural variants, using a controller model trained with reinforcement learning to propose candidates.

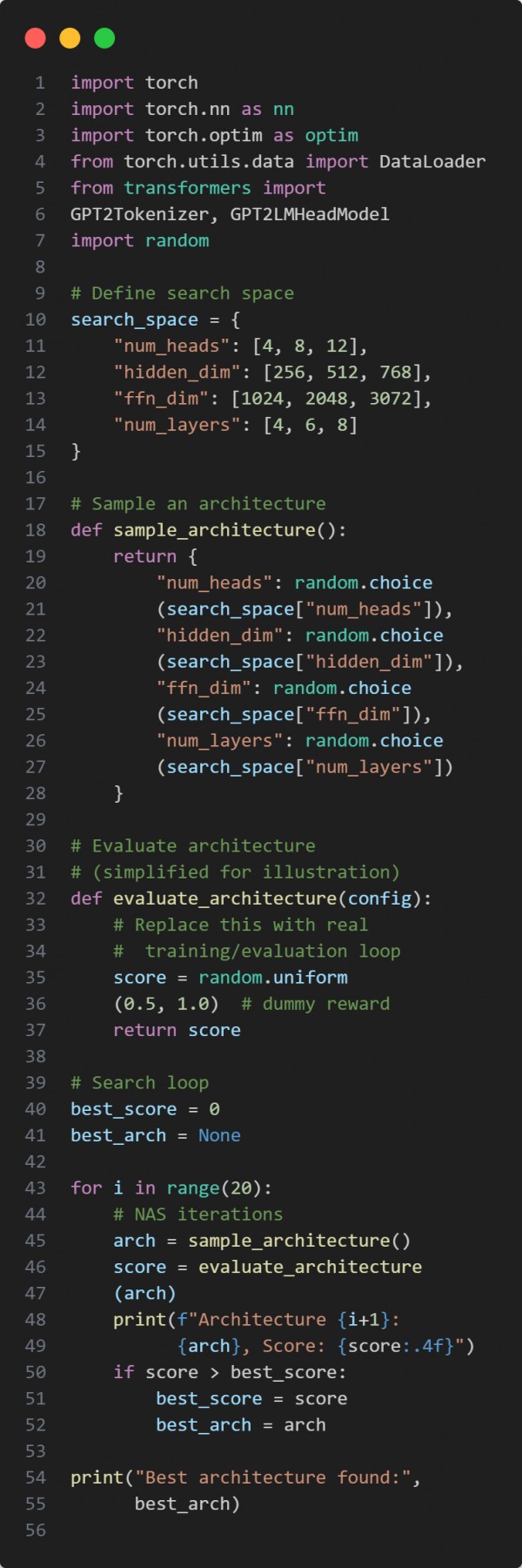

Here is the code snippet below:

The code above relies on the following key points:

- `search_space` defines different Transformer configurations.
- `sample_architecture` picks random combinations for exploration.
- `evaluate_architecture` provides a placeholder for model training and scoring.
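Since the snippet is only available as an image, here is a minimal sketch of how these three pieces could fit together. It is not the original code: the hyperparameter names and values in `search_space`, the helper `random_search`, and the parameter-count proxy inside `evaluate_architecture` are all assumptions made for illustration. In a real pipeline, `evaluate_architecture` would build the model, train it briefly, and return something like negative validation perplexity.

```python
import random

# Hypothetical search space: one entry per Transformer block hyperparameter.
search_space = {
    "num_layers": [2, 4, 6],
    "num_heads": [2, 4, 8],
    "d_model": [128, 256, 512],
    "ffn_multiplier": [2, 4],
    "dropout": [0.0, 0.1, 0.3],
}


def sample_architecture(space, rng):
    """Pick one value per hyperparameter uniformly at random."""
    return {name: rng.choice(options) for name, options in space.items()}


def evaluate_architecture(arch):
    """Placeholder score (higher is better).

    Assumption: a rough parameter count stands in for real training.
    Replace this with actual training and validation scoring.
    """
    d = arch["d_model"]
    attention_params = 4 * d * d                      # Q, K, V and output projections
    ffn_params = 2 * d * d * arch["ffn_multiplier"]   # two feed-forward matrices
    total_params = arch["num_layers"] * (attention_params + ffn_params)
    return -total_params / 1e6  # toy proxy: smaller models score higher


def random_search(space, num_trials=20, seed=0):
    """Sample architectures, score each one, and keep the best."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(num_trials):
        arch = sample_architecture(space, rng)
        score = evaluate_architecture(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


if __name__ == "__main__":
    best_arch, best_score = random_search(search_space)
    print("Best architecture:", best_arch)
    print("Score:", best_score)
```

Because the proxy only rewards small models, this sketch will drift toward the smallest configuration; the loop structure is the part that carries over once a real training-based score is plugged in.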

Hence, this pipeline helps automatically discover efficient Transformer designs tailored for text generation.
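The answer opens by mentioning a controller model trained with reinforcement learning, while `sample_architecture` is described as picking random combinations. As a rough sketch of how a controller could take over the sampling step, the example below uses a simple table of softmax logits per decision (not a recurrent controller) and updates it with the REINFORCE rule and a moving-average baseline. The names `CHOICES`, `toy_reward`, and `train_controller` are hypothetical, and the reward is a stand-in that pretends 4 heads and 4 layers are best; a real reward would come from training and validating each sampled model.

```python
import math
import random

# Hypothetical decisions the controller has to make.
CHOICES = {
    "num_heads": [2, 4, 8],
    "num_layers": [2, 4, 6],
}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_reward(arch):
    """Stand-in reward (assumption): pretends 4 heads and 4 layers are best."""
    return float(arch["num_heads"] == 4) + float(arch["num_layers"] == 4)


def train_controller(steps=1000, lr=0.1, seed=0):
    """Train one softmax policy per decision with REINFORCE."""
    rng = random.Random(seed)
    logits = {name: [0.0] * len(opts) for name, opts in CHOICES.items()}
    baseline = 0.0  # moving average of rewards, reduces gradient variance

    for _ in range(steps):
        # Sample one option index per decision from the current policy.
        picks = {}
        for name, opts in CHOICES.items():
            probs = softmax(logits[name])
            picks[name] = rng.choices(range(len(opts)), weights=probs)[0]

        arch = {name: CHOICES[name][i] for name, i in picks.items()}
        reward = toy_reward(arch)
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward

        # REINFORCE update: d log p(i) / d logit_j = 1[j == i] - p_j
        for name, i in picks.items():
            probs = softmax(logits[name])
            for j, p in enumerate(probs):
                grad = (1.0 if j == i else 0.0) - p
                logits[name][j] += lr * advantage * grad

    return {name: softmax(values) for name, values in logits.items()}


if __name__ == "__main__":
    for name, probs in train_controller().items():
        print(name, [round(p, 3) for p in probs])
```

Over training, the policy should shift probability mass toward the options that `toy_reward` favors. The same loop applies with a real reward, though each step then costs a full training run, which is why practical NAS setups often rely on weight sharing or cheap proxy tasks.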
