You can tune the hyperparameters used to fine-tune a Transformer with a Bayesian optimization library such as Optuna, which searches the hyperparameter space efficiently.

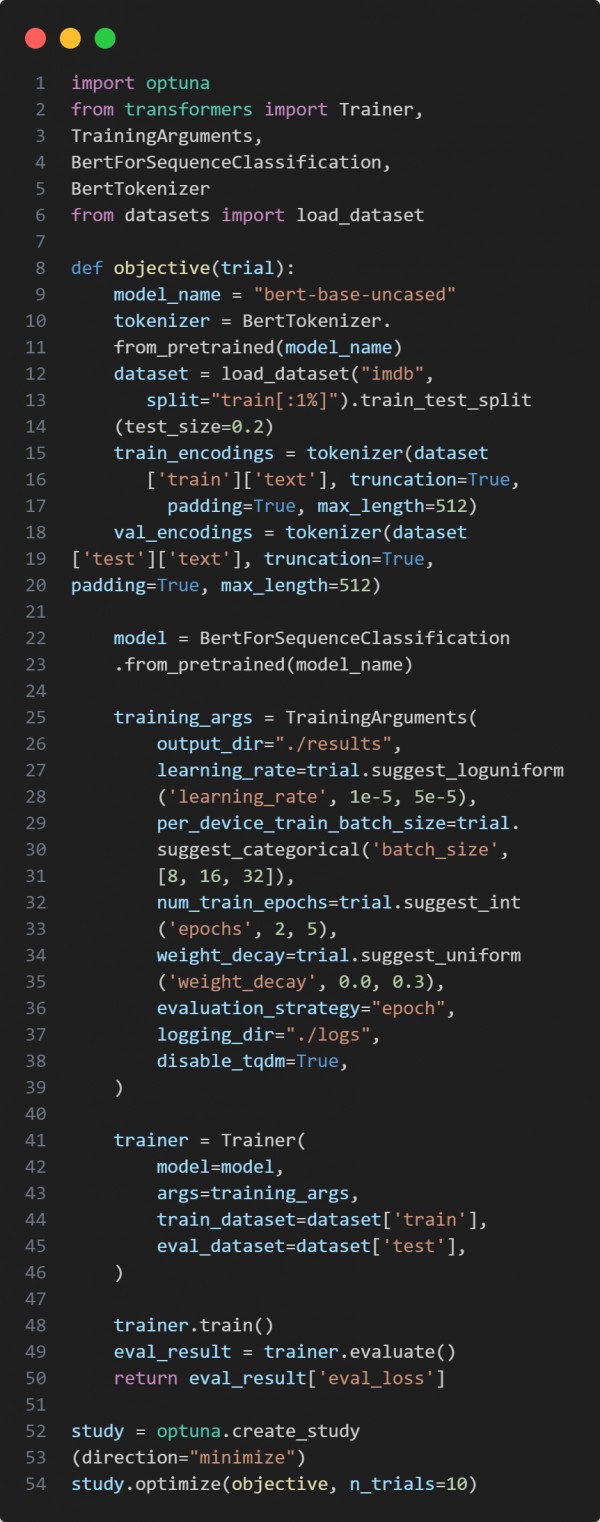

Here is the code snippet below:

The code above relies on the following key components:

- Optuna's trial object, which samples hyperparameters such as the learning rate, batch size, number of epochs, and weight decay.
- The Hugging Face Trainer API, which manages training and evaluation.
- The IMDB dataset, used as a sample text-classification task.

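Because Optuna uses the results of earlier trials to choose the next set of hyperparameters, this approach typically explores the search space more efficiently than manual tuning, improving model performance with minimal manual effort.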