You can implement the Zero Redundancy Optimizer (ZeRO) for large-model training by partitioning optimizer states across data-parallel processes, so that each process stores only a fraction of them and per-process memory use drops.
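To make the partitioning idea concrete, here is a plain-Python sketch that assigns parameter tensors to ranks so each rank would hold optimizer state only for its own subset. It uses a greedy size-balancing heuristic (largest tensor first, to the currently lightest rank). `partition_params` is a hypothetical helper for illustration, not PyTorch's internal implementation.

```python
from typing import List


def partition_params(param_sizes: List[int], world_size: int) -> List[List[int]]:
    """Hypothetical helper: map parameter indices to ranks, balancing element counts."""
    shards: List[List[int]] = [[] for _ in range(world_size)]
    totals = [0] * world_size
    # Visit the largest tensors first; sorted() is stable, so ties keep their order.
    order = sorted(range(len(param_sizes)), key=lambda i: param_sizes[i], reverse=True)
    for idx in order:
        # Give the tensor to the rank that currently holds the fewest elements.
        rank = min(range(world_size), key=lambda r: totals[r])
        shards[rank].append(idx)
        totals[rank] += param_sizes[idx]
    return shards


# Sizes of a Linear(32, 64) -> Linear(64, 1) model: weight, bias, weight, bias.
print(partition_params([2048, 64, 64, 1], world_size=2))  # [[0], [1, 2, 3]]
```

Each rank would then create optimizer state (for example, Adam's moment buffers) only for the indices in its own shard.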

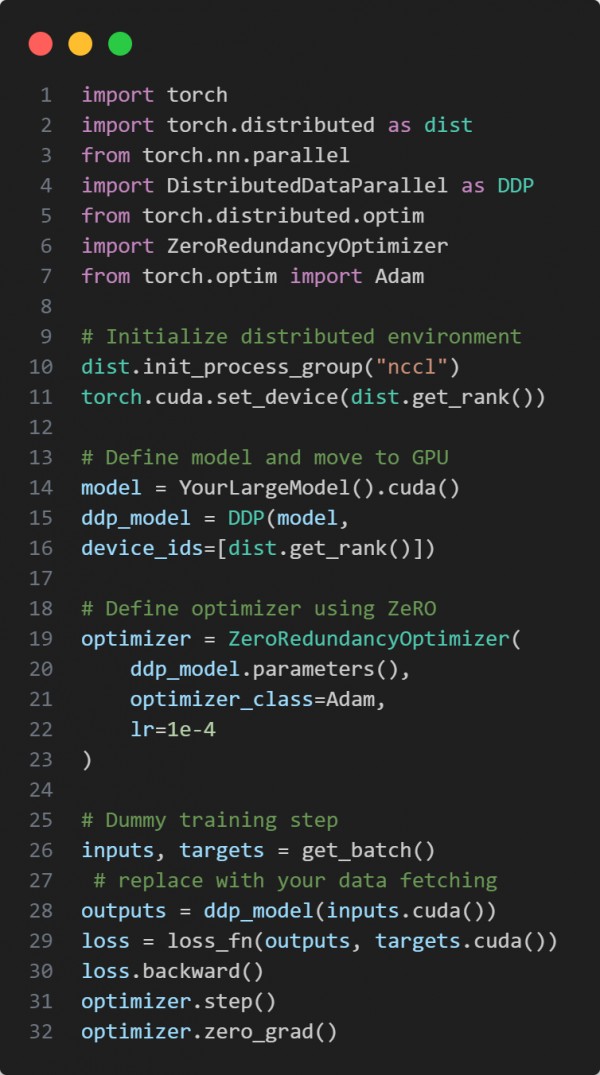

Here is the code snippet below:

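The code above relies on the following key pieces:

- `ZeroRedundancyOptimizer` from PyTorch's distributed library (`torch.distributed.optim`), which wraps a regular optimizer such as Adam and shards its state.
- `DistributedDataParallel` (DDP), which keeps model replicas synchronized by all-reducing gradients during the backward pass.
- Sharding of optimizer states across GPUs, so each process stores only its share of the optimizer state instead of a full copy.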
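A minimal runnable sketch that combines these pieces is shown below. It assumes a single machine, the CPU-only `gloo` backend, and a toy MLP on random data; the `train` function, model shape, port, and hyperparameters are illustrative choices, not the original snippet. The `ZeroRedundancyOptimizer(params, optimizer_class=..., lr=...)` call follows PyTorch's documented usage.

```python
import os

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.distributed.optim import ZeroRedundancyOptimizer
from torch.nn.parallel import DistributedDataParallel as DDP


def train(rank: int, world_size: int, steps: int = 5):
    """Hypothetical training loop; returns (first_loss, last_loss) on this rank."""
    # Assumption: single machine, CPU "gloo" backend. For multi-GPU training use
    # "nccl" and move the model and data to f"cuda:{rank}".
    os.environ.setdefault("MASTER_ADDR", "127.0.0.1")
    os.environ.setdefault("MASTER_PORT", "29500")
    dist.init_process_group("gloo", rank=rank, world_size=world_size)
    try:
        torch.manual_seed(0)  # same initial weights on every rank
        model = DDP(nn.Sequential(nn.Linear(32, 64), nn.ReLU(), nn.Linear(64, 1)))
        # Each rank keeps Adam state only for its own shard of the parameters.
        optimizer = ZeroRedundancyOptimizer(
            model.parameters(), optimizer_class=torch.optim.Adam, lr=1e-2
        )
        loss_fn = nn.MSELoss()
        # Per-rank random data, standing in for a data-parallel split of a dataset.
        g = torch.Generator().manual_seed(rank)
        x, y = torch.randn(16, 32, generator=g), torch.randn(16, 1, generator=g)
        losses = []
        for _ in range(steps):
            optimizer.zero_grad()
            loss = loss_fn(model(x), y)
            loss.backward()   # DDP all-reduces gradients across ranks here
            optimizer.step()  # each rank updates its shard; updated params are synced
            losses.append(loss.item())
        return losses[0], losses[-1]
    finally:
        dist.destroy_process_group()


if __name__ == "__main__":
    print(train(rank=0, world_size=1))
```

To run with several processes, launch one per rank, for example `torch.multiprocessing.spawn(train, args=(world_size,), nprocs=world_size)`, which calls `train(i, world_size)` for each process index `i`.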

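Hence, ZeRO lets you train much larger models on the same hardware: each process keeps optimizer state only for its own shard of the parameters and runs the optimizer step only for that shard, after which the updated parameters are synchronized across GPUs.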
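As a rough worked example of the savings, the sketch below estimates per-process memory for model state only. It assumes fp32 training with Adam: 4 bytes per parameter for weights, 4 for gradients, and 8 for Adam's two moment buffers. Activations, buffers, and communication scratch space are ignored, and `per_rank_bytes` is a hypothetical helper.

```python
def per_rank_bytes(num_params: int, world_size: int, sharded: bool = True) -> int:
    """Hypothetical estimate of per-process model-state memory in bytes."""
    weights_and_grads = (4 + 4) * num_params  # fp32 weights + fp32 gradients, replicated
    optimizer_state = 8 * num_params          # Adam exp_avg + exp_avg_sq in fp32
    if sharded:
        # ZeRO-style sharding: each rank stores about 1/world_size of the state.
        optimizer_state = -(-optimizer_state // world_size)  # ceiling division
    return weights_and_grads + optimizer_state


n, ranks = 1_000_000_000, 8
print(per_rank_bytes(n, ranks, sharded=False) / 1e9)  # 16.0 (GB, plain DDP + Adam)
print(per_rank_bytes(n, ranks) / 1e9)                 # 9.0 (GB, optimizer state sharded)
```

Only the optimizer-state term shrinks with more ranks, so the relative savings grow with the share of memory the optimizer state occupies.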
